What is a Hyperscale Data Center?

A hyperscale data center is a large-scale distributed computing facility that supports high-volume data processing, computing, and storage services. The term “hyperscale” refers both to the size of the data center and to its ability to scale up capacity in response to demand. The International Data Corporation (IDC) defines a hyperscale data center as one that uses at least 5,000 servers and at least 10,000 square feet of floor space, although many centers are significantly larger.
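The IDC size thresholds quoted above can be expressed as a simple check. This is a minimal Python sketch; the constant and function names are hypothetical, and a real classification may weigh factors beyond these two numbers.

```python
# Hypothetical helper encoding the IDC thresholds quoted in the text:
# at least 5,000 servers and at least 10,000 square feet of floor space.
MIN_SERVERS = 5_000
MIN_FLOOR_AREA_SQFT = 10_000


def is_hyperscale(servers: int, floor_area_sqft: float) -> bool:
    """Return True only when both IDC size thresholds are met."""
    return servers >= MIN_SERVERS and floor_area_sqft >= MIN_FLOOR_AREA_SQFT


print(is_hyperscale(12_000, 250_000))  # True
print(is_hyperscale(4_000, 50_000))    # False: too few servers
```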

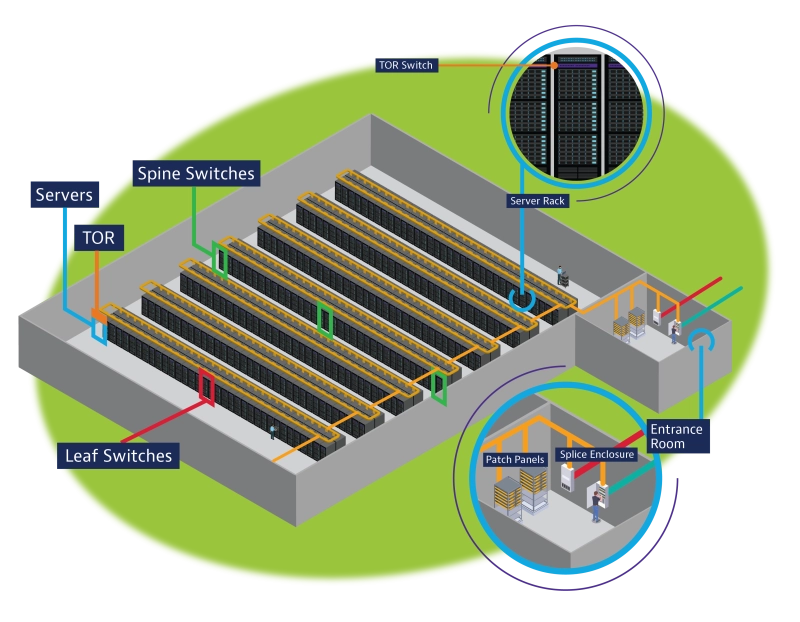

- A single hyperscale data center may include hundreds of miles of fiber optic cable to connect the servers used for data storage, networking, and computing.

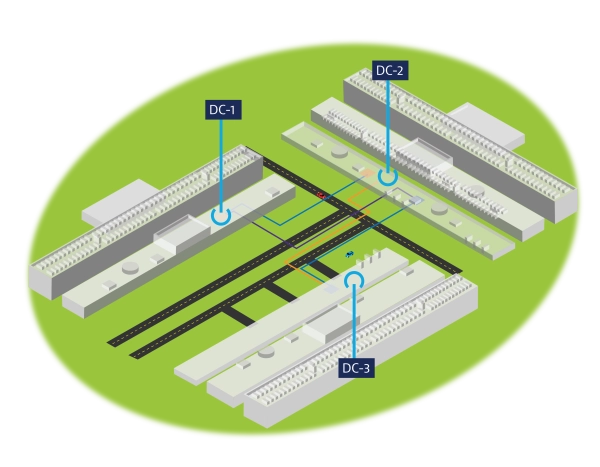

- Data center interconnects (DCIs) link hyperscale data centers to one another. Proactive DCI testing during installation is essential for identifying sources of latency and other performance issues.

- Software-defined networking (SDN) is often incorporated, along with the hyperscaler’s own unique software and hardware technologies.

- Horizontal scaling (scaling out) is accomplished by adding more servers and other hardware to the data center.

- Vertical scaling (scaling up) increases the power, speed, or bandwidth of existing hardware.

- There are over 600 hyperscale data centers in operation worldwide today, and this number continues to grow.
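The difference between scaling out and scaling up can be illustrated with a toy capacity model. The sketch below is hypothetical: it assumes identical servers whose capacity adds linearly, which real workloads rarely achieve, and the `Cluster`, `scale_out`, and `scale_up` names are illustrative only.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Cluster:
    """Toy model: identical servers whose capacity adds linearly (an assumption)."""
    servers: int
    capacity_per_server: float  # e.g. requests per second; units are illustrative

    @property
    def total_capacity(self) -> float:
        return self.servers * self.capacity_per_server


def scale_out(cluster: Cluster, extra_servers: int) -> Cluster:
    """Horizontal scaling: add more machines of the same kind."""
    return replace(cluster, servers=cluster.servers + extra_servers)


def scale_up(cluster: Cluster, factor: float) -> Cluster:
    """Vertical scaling: make each existing machine more powerful."""
    return replace(cluster, capacity_per_server=cluster.capacity_per_server * factor)


base = Cluster(servers=5_000, capacity_per_server=1_000.0)
print(scale_out(base, 5_000).total_capacity)  # 10000000.0
print(scale_up(base, 2.0).total_capacity)     # 10000000.0
```

In this model both operations double total capacity, but in practice scaling out also adds floor space, power, and cabling demands, while scaling up is bounded by what a single machine can hold.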

Hyperscale data centers allow internet content providers (ICPs), public cloud deployments, and big data storage solutions to deploy new services or scale up quickly, making them highly responsive to customer demand. The immense data center size also leads to improved cooling efficiency, balanced workloads among servers, and reduced staffing requirements. Additional benefits include:

- Reduced Downtime: The built-in redundancies and continuous monitoring practices employed by hyperscale data center companies minimize interruptions in service and accelerate issue resolution.

- Advanced Technology: Best-in-class server and virtual networking technologies along with 400G-800G Ethernet DCI connections lead to ultra-fast computing and data transport speeds, high reliability levels, and automated self-healing capabilities.

- Lower CAPEX: Hyperscale customers benefit from lease or subscription models that eliminate upfront hardware and infrastructure costs and enable the computing needs of their business to be flexibly scaled up or down.
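Built-in redundancy reduces downtime because service survives as long as at least one of several redundant units is working. A common first approximation, assuming failures are independent (an assumption real facilities only partly satisfy), is A = 1 - (1 - a)^n for n units that each have availability a. A minimal sketch with a hypothetical function name:

```python
def parallel_availability(component_availability: float, redundant_units: int) -> float:
    """Availability of N redundant units where any single unit keeps the service up.

    Assumes independent failures, which real facilities only approximate.
    """
    if not 0.0 <= component_availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    if redundant_units < 1:
        raise ValueError("need at least one unit")
    # Service is down only if every unit is down at the same time.
    unavailability = (1.0 - component_availability) ** redundant_units
    return 1.0 - unavailability


for n in (1, 2, 3):
    print(n, parallel_availability(0.99, n))
```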

Drawbacks of Hyperscale Data Centers

With hyperscale data center architecture relying on size and scalability, shortages of resources such as land, materials, labor, and equipment can quickly derail growth. These challenges can be more severe in underdeveloped or remote regions with fewer available workers, utilities, and roads.

- Hyperscale construction schedules are compressed by the increased demand for internet content, big data storage, and telecom applications. The addition of 5G, the IoT, and intelligent edge computing centers adds to this burden. These pressures can lead to minimized or omitted pre-deployment performance and fiber testing, and to more problems being discovered after commissioning.

- Supply chain issues for hyperscale data centers are complicated by customization and the early adoption of new hardware and software technologies. High volume production coupled with short lead times creates challenges for many suppliers.

- Rapid evolution of technology to enhance performance can also become a drawback. The pace of advancement described by Moore’s Law (transistor density doubling roughly every two years) forces hyperscale data center companies to refresh hardware and software infrastructure almost continuously to avoid obsolescence.
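Assuming the commonly quoted doubling period of about two years, the density gap between installed hardware and newly available hardware grows quickly. The helper below is a hypothetical illustration of that arithmetic, not a forecast; the actual pace of improvement has varied over time.

```python
def density_gain(years: float, doubling_period_years: float = 2.0) -> float:
    """Growth factor in transistor density after `years`, assuming steady doubling."""
    return 2.0 ** (years / doubling_period_years)


# Gap between hardware bought today and what is available when it is replaced.
print(density_gain(4))  # 4.0
print(density_gain(6))  # 8.0
```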

Key Factors to Consider

Configuration options for hyperscale data centers continue to multiply in the age of disaggregated and virtual networks and edge computing. Even so, several fundamental constraints common to all hyperscale data centers shape their long-term efficiency, reliability, and performance.

- Site location is among the most important factors when it comes to planning. With new hyperscale installations topping two million square feet, the cost and availability of real estate must be balanced against the desirability of the location and the availability of resources. Improved automation, machine learning, and virtual monitoring are making remote global locations with inherent cooling efficiencies viable options.

- Energy source availability and cost are primary considerations, with some data center peak power loads exceeding 100 megawatts. Cooling alone can account for up to half of this budget. Redundant power sources and standby generators help ensure the “five nines” (99.999%) availability sought by hyperscale data center companies. On-premises or nearby renewable energy sources are also being pursued to reduce the CO2 emissions associated with hyperscale data center power consumption.

- Security concerns are magnified by the size of the hyperscale data center. Although proactive security systems are an essential part of cloud computing, a single breach can expose huge amounts of sensitive customer data. Improving visibility both within and between hyperscale data centers to ward off potential security threats is a vital objective for network managers and IT professionals.
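The “five nines” target and the cooling share mentioned above translate into concrete numbers. The sketch below assumes a 365-day year and treats availability as the fraction of time in service; the function name is hypothetical.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; leap years ignored


def allowed_downtime_minutes(availability: float) -> float:
    """Maximum downtime per year consistent with an availability target."""
    return (1.0 - availability) * MINUTES_PER_YEAR


for label, target in [("three nines", 0.999), ("four nines", 0.9999), ("five nines", 0.99999)]:
    print(f"{label} ({target:.3%}): {allowed_downtime_minutes(target):.2f} min/year")

# Cooling at "up to half" of a 100 MW peak load leaves the rest for IT and other loads.
peak_mw = 100.0
cooling_share = 0.5  # upper bound quoted in the text
cooling_mw = peak_mw * cooling_share
print(f"cooling: {cooling_mw:.0f} MW, remaining: {peak_mw - cooling_mw:.0f} MW")
```

Five nines allows only about 5.26 minutes of downtime per year, which is why redundancy in power and cooling is treated as a baseline requirement rather than an option.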

Hyperscale data center architecture differs from traditional data centers in more than sheer size and capacity. Modular, configurable servers with centralized uninterruptible power supply (UPS) systems improve efficiency and reduce maintenance. Cooling systems are also centralized, with large fans or blowers used to optimize temperature levels throughout the facility. At the software level, virtualization techniques such as containerization enable applications to move quickly between servers or data centers. Disaggregation and edge computing in hyperscale data center architecture have made high-speed 400G and 800G Ethernet transport testing essential.

Enterprise vs Hyperscale Data Center

Enterprise data centers are owned and operated by the companies they support. These centers originated as small on-premises server rooms supporting a specific company location. Consistent growth in traffic, storage, and computing requirements has now driven many enterprise data centers into hyperscale territory. This change applies to the increased size of enterprise data center deployments, as well as to dispersed locations, energy-efficient designs, and increased use of self-healing automation. Despite this convergence, hyperscale data centers typically have higher fiber density across the network.

Colocation vs Hyperscale Data Center

As hyperscale data center solutions evolve to meet growing customer requirements for capacity and performance, colocation has become an increasingly popular approach. The colocation model allows data center owners to lease their available space, power, and cooling capacity to other organizations. In some cases, design services, IT support, and hardware are also procured by the tenant company. This allows smaller companies to realize the benefits of a hyperscale data center without the investment and time required to build one from the ground up.

Hyperscale Data Center Design

The size and complexity of hyperscale architecture drive a top-down approach to hyperscale data center design. Short- and long-term requirements for memory, storage, and computing power ripple through hardware specification and configuration, software design, facility planning, and utilities. The role of the data center within a campus setting and other interconnection requirements must also be carefully considered.

Test planning during the design phase can prevent construction delays and reduce service degradation after deployment. Important considerations include MPO-native fiber link testing and certification, high-speed optical transport network (OTN) testing, and Ethernet service activation. Early incorporation of observability tools and network traffic emulation can further safeguard ongoing performance.
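In Ethernet throughput and service activation testing (for example, methodologies such as IETF RFC 2544 or ITU-T Y.1564), measured results are compared with the theoretical maximum frame rate of the link. The sketch below assumes the classic 20 bytes of per-frame overhead on the wire (8 bytes of preamble and start-of-frame delimiter plus a 12-byte minimum inter-frame gap) and ignores lower-layer details of 400G/800G PHYs such as alignment markers; the function name is hypothetical.

```python
PREAMBLE_SFD_BYTES = 8  # 7-byte preamble + 1-byte start-of-frame delimiter
MIN_IFG_BYTES = 12      # minimum inter-frame gap


def max_frame_rate(line_rate_bps: float, frame_bytes: int) -> float:
    """Theoretical maximum frames per second for a given Ethernet frame size."""
    bits_per_frame_on_wire = (frame_bytes + PREAMBLE_SFD_BYTES + MIN_IFG_BYTES) * 8
    return line_rate_bps / bits_per_frame_on_wire


for rate_gbps in (400, 800):
    for frame_bytes in (64, 1518):
        fps = max_frame_rate(rate_gbps * 1e9, frame_bytes)
        print(f"{rate_gbps}G, {frame_bytes}-byte frames: {fps:,.0f} frames/s")
```

At 400 Gb/s with 64-byte frames this works out to roughly 595 million frames per second, and doubling the line rate doubles it.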

Hyperscale Data Center Power Consumption

With internet traffic increasing ten-fold in less than a decade and data centers already consuming approximately 3% of the world’s electricity, more focus has been placed on hyperscale data center power consumption.
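A tenfold increase in traffic over roughly a decade implies a steep compound annual growth rate. The helper below (hypothetical name) computes it; over ten years it gives about 26% per year, and a shorter span implies faster growth.

```python
def implied_annual_growth(total_factor: float, years: float) -> float:
    """Compound annual growth rate implied by `total_factor` growth over `years`."""
    return total_factor ** (1.0 / years) - 1.0


print(f"{implied_annual_growth(10, 10):.1%}")  # 25.9%: tenfold over ten years
print(f"{implied_annual_growth(10, 8):.1%}")   # 33.4%: tenfold over eight years
```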

- Network function virtualization (NFV), which replaces dedicated active electronics with software, and artificial intelligence (AI), which intelligently controls server and optical power levels, have already produced significant efficiency improvements.

- The advent of lights-out (unmanned) data centers, assisted by 5G-enabled IoT monitoring processes, will enable more hyperscale data centers to be deployed in colder, remote locations (such as Iceland) with built-in cooling benefits.

- As energy consumption increases, a shift from fossil fuels to renewable sources such as solar, wind, and hydroelectric power will reduce the overall environmental impact of hyperscale data centers.

Many leading cloud computing companies and data center owners have made climate neutrality pledges: Google and Microsoft have set 2030 targets, Amazon has committed to net-zero carbon by 2040, and others have already accomplished this goal.

The Future of Hyperscale Data Centers

New technology and applications will continue to drive the demand for testing and computing into the next decade and beyond. As the monetization opportunities presented by 5G and the IoT expand, new entrants will create applications that further challenge density, throughput, and efficiency limits.

As data centers increase in size, they will also become more distributed. Smaller edge computing centers move intelligence closer to users while reducing latency and susceptibility to large-scale distributed denial of service (DDoS) attacks. The trend toward distributed networks led by Google, Amazon (AWS), and other hyperscale leaders means more data centers will be interconnected through immense fiber networks, as each provider expands its reach and customer base to improve service levels.
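Part of the latency benefit of edge computing comes from physics: light in optical fiber travels at roughly two-thirds of its vacuum speed, so distance alone adds delay. The sketch below assumes a group index of about 1.468 for standard single-mode fiber and counts only propagation delay, not switching, queuing, or processing time; the names are hypothetical.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458
GROUP_INDEX = 1.468  # assumed typical value for standard single-mode fiber


def fiber_rtt_ms(distance_km: float, group_index: float = GROUP_INDEX) -> float:
    """Round-trip propagation delay over fiber, ignoring equipment and queuing delay."""
    one_way_s = distance_km * group_index / SPEED_OF_LIGHT_KM_PER_S
    return 2 * one_way_s * 1_000


# Nearby edge site vs. metro vs. distant regional data center.
for km in (10, 100, 1_000):
    print(f"{km:>5} km: {fiber_rtt_ms(km):.2f} ms RTT")
```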

Data center consolidation and colocation will continue to drive interoperability and reduce barriers to entry. Fortunately, these consolidated hyperscale locations are also well equipped to employ green technologies such as liquid cooling, solar rooftops, and wind turbines, along with advanced AI to optimize cooling and power consumption.

Hyperscale computing is truly a global digital phenomenon, with a growing proportion of new footprints appearing in Asia, Europe, South America, and Africa as the building boom continues. Massive new submarine cables (submerged DCI) will be deployed on the ocean floor to connect these diverse geographies, and perhaps new data centers will be deployed there as well.

As the technology evolves, VIAVI continues to draw upon experience, expertise, and end-to-end (e2e) involvement to help our customers. Our hyperscale data center solutions are used for planning, deployment, and management of discrete elements, from conception in the lab to servicing in the field, and beyond.

Hyperscale data center growth in size, complexity, and density means accelerated construction timelines, DCIs running close to full capacity, and visibility challenged by the increased speed and quantity of fiber connections. VIAVI test and monitoring solutions developed for conventional data centers have evolved to produce the industry’s most comprehensive hyperscale data center test suite.

- Fiber Certification: In addition to efficient and reliable fiber inspection to prevent compromised throughput and performance, tier 1 certification, which verifies loss levels and polarity, should be completed in conjunction with tier 2 certification, which uses OTDR testing to pinpoint the sources and locations of loss and optical return loss. VIAVI provides advanced, automated fiber certification and characterization solutions designed specifically for MPO interfaces and high-density fiber environments.

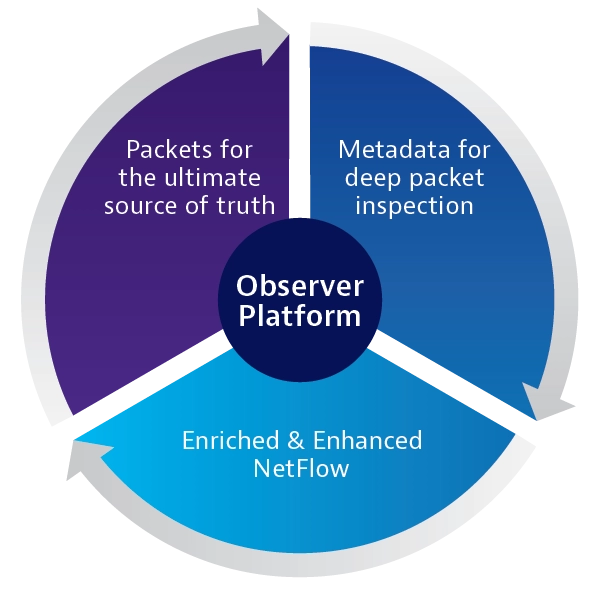

- Monitoring: Remote monitoring addresses the trend toward lights-out hyperscale data center design. The SmartOTU fiber monitoring solution provides instant alerts when performance degradation or fiber-tapping is suspected, while the ONMSi Remote Fiber Test System (RFTS) performs ongoing OTDR “sweeps” to accurately detect and predict fiber issues throughout the network. The MAP-2100 solution performs important bit error rate (BER) testing for unmanned data centers.

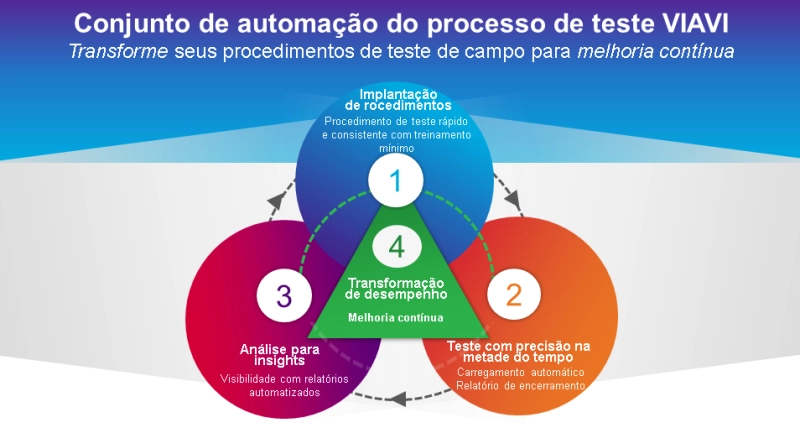

- Test Process Automation: Automation has provided a gateway to improved hyperscale data center performance and reliability along with test efficiency and consistency. Test process automation (TPA) applied to fiber inspection, certification, and network monitoring workflows accelerates test and reporting cycles, reduces training requirements, and improves alignment between technicians and customers.
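The arithmetic behind these fiber and BER tests can be sketched briefly. The Python below assumes TIA-568-style maximum allowances (1.0 dB/km for inside-plant single-mode fiber, 0.75 dB per mated connector pair, 0.3 dB per splice) for a tier 1 loss budget, a group index of 1.468 for converting an OTDR round-trip time into distance, and the zero-error relationship CL = 1 - exp(-N × BER) for sizing a BER test. All names and the example link are hypothetical; the applicable standard and project specification set the real acceptance limits.

```python
import math

# Illustrative TIA-568-style maximum allowances (assumed; verify against the standard).
FIBER_DB_PER_KM = 1.0   # single-mode, inside plant
MATED_PAIR_DB = 0.75    # per mated connector pair
SPLICE_DB = 0.3         # per splice
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def loss_budget_db(length_km: float, mated_pairs: int, splices: int = 0) -> float:
    """Maximum allowable end-to-end link loss for a tier 1 pass/fail check."""
    return length_km * FIBER_DB_PER_KM + mated_pairs * MATED_PAIR_DB + splices * SPLICE_DB


def tier1_pass(measured_loss_db: float, length_km: float, mated_pairs: int, splices: int = 0) -> bool:
    """Pass if the measured insertion loss does not exceed the computed budget."""
    return measured_loss_db <= loss_budget_db(length_km, mated_pairs, splices)


def otdr_event_distance_m(round_trip_time_s: float, group_index: float = 1.468) -> float:
    """Distance to an event from OTDR time of flight (the pulse travels out and back)."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / (2.0 * group_index)


def bits_for_ber_confidence(target_ber: float, confidence: float) -> float:
    """Error-free bits needed to claim BER <= target at the given confidence level."""
    return -math.log(1.0 - confidence) / target_ber


# Hypothetical 150 m MPO trunk with four mated connector pairs.
print(round(loss_budget_db(0.15, 4), 2))       # 3.15
print(tier1_pass(2.1, 0.15, 4))                # True
print(round(otdr_event_distance_m(10e-6), 1))  # about 1021 m for a 10 µs round trip
bits = bits_for_ber_confidence(1e-12, 0.95)
print(f"{bits:.3e} bits, {bits / 400e9:.1f} s at 400 Gb/s")
```

The BER calculation shows why automated, unattended testing matters: proving a very low error rate with high confidence requires trillions of error-free bits, and the required time scales inversely with the line rate.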

Hyperscale data centers continue to grow in size and complexity, even as 5G and the IoT drive network disaggregation and push intelligence to the edge. VIAVI helps operators and providers to get the most from the hyperscale ecosystem and the services it carries so they can turn up infrastructure, successfully scale, and profitably innovate—today and tomorrow.

With an unmatched breadth and depth of interoperable test products and expertise, VIAVI ensures levels of service and reliability that meet the high standards of hyperscale data center operators and service providers. Leveraging decades of experience and collaboration, VIAVI test solutions optimize optical hardware, fiber, and network performance over the lifecycle of the hyperscale data center, from lab to turn-up to monitoring.

Automated fiber certification, MPO connector inspection, high-speed 400G or 800G throughput testing, and virtual service activation and monitoring are among the advanced test capabilities designed to keep up with unceasing hyperscale data center growth. Testing and assurance within the data center extend outward to data center campuses and large-scale metro networks, along with global and distributed data center networks and their interconnects.